Server Roundup: Where They’ve Been and Where They’re Going

Computer servers have been in use since the 1980s. They came into being as the computing world shifted from mainframes and minicomputers to distributed PC networks.

History and Characterization

That development led to a scare for IBM, the original developer of the PC, as the world migrated to PCs and networks, diminishing the role of mainframes. The IBM PC-AT opened a Pandora’s box of decentralized computing and the development of PC/Mac hardware and software. Computing no longer required, and to a great extent eschewed, the centralized system controlled by a few. One could argue that the arrival of the PC was a powerful existential experience for the now-unshackled worker. Many companies, including Digital Equipment, Data General, Wang, Burroughs, and others, did not survive this massive transition. They may not have anticipated that the PC revolution and the (future) Internet would enable an even larger “mainframe”/minicomputer ecosystem called servers.

IBM rode through that turmoil and emerged as the leading server manufacturer, and is still in the mainframe business. Interestingly, IBM also invented the PC, but could not deal with it within a corporate culture tied to the profitability of the mainframe. IBM’s PC division lost more than $1 billion in its last four years of existence (2001-05) because it could not generate the margins IBM needed to support its high-cost corporate structure, and it suffered from too much competition.

The IBM PC business was sold to China’s Lenovo in 2005. Lenovo, with the help of former IBM execs, has moved the business into the #3 position in the PC market, behind HP and Dell, and outpacing Acer for that slot. But long before that, IBM had developed the open system PC-AT architecture, and in 1980 it paid Bill Gates and Paul Allen to develop the original MS-DOS operating system. Microsoft later developed MS-DOS into Windows, which, had it been combined with the PC, would have been highly profitable. I actually know a few of the IBMers involved in the PC scenario, all top-notch people instilled right out of college into the IBM management mode. I was once given the opportunity to make a growth presentation on the PC to IBM in Boca Raton, Fla. That facility is still there, doing other things, but it’s not the huge complex IBM originally envisioned. The PC “company” moved on to North Carolina and then China. I sometimes drive by the Boca facility, musing about what could have been. But IBM is to be complimented for its agility during that massive change in the industry.

Now, on to the server. The basic difference between a mainframe and server is that a mainframe is a centralized source of computing power connected to dumb terminals that access data from the mainframe. This was how the world was: The all-powerful and all-knowing mainframe run by equally powerful IT departments, who in turn got their instructions from IBM account execs and others (I mean this tongue-in-cheek, by the way). The server is what its name describes: a sophisticated piece of computer hardware serving the diverse needs of users ― or a network of servers ― via PCs, networks, and an Internet-centric universe. Servers don’t necessarily have the raw computing power of a mainframe, but they are designed to provide relatively foolproof services to users. Literally millions of pieces of IT hardware act as web servers worldwide.

Types of Servers

There are many different types of servers, and their applications fall into several different categories: corporate and departmental servers, e-commerce servers, web servers, etc. Server hardware falls into many different and increasingly complex categories:

- Big box/enterprise servers

- Desk-side departmental servers

- Equipment room rack and blade servers

- Application-specific servers

- Servers manufactured by OEMs such as IBM and HP

- Servers built from the ground up by large users such as Google

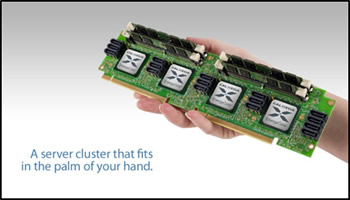

- New micro-server architecture based on very low power chips

- Virtualization servers that provide multiple operating systems in one server platform

Some primary characteristics of servers include:

- Some use commodity hardware cloned from the PC business

- Others use higher-reliability hardware procured with strict vendor controls

- Redundant power supplies, storage, and logic

- Large banks of memory and disk storage

- Error detection and correction

- High power consumption with substantial cooling systems

- High IO capability in order to serve multiple clients over a network

- High-speed network access: 1, 10, and 40Gb/s Ethernet, etc.

It is estimated that server farms and other server facilities consume as much as 5% of the developed world’s electricity. Thus major efforts are under way to reduce power consumption, including the advent of micro-server platforms that use low-power Intel Atom, Sandy Bridge, ARM, and other chips consuming less than 15W, as opposed to Intel’s Xeon, which consumes 45-50W. This trend in power management is much like what is happening in the mobile computer segment ― and some blade servers use packaging similar to a notebook PC, but with much higher thermal mitigation.
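To put the chip-level wattages above in perspective, here is a minimal sketch of the annual energy a single processor would draw at each quoted power level. It assumes continuous operation (8,760 hours per year) at the quoted wattage and ignores cooling, memory, storage, and power-supply losses, none of which are given in the figures above. The function name `annual_kwh` and the dictionary labels are purely illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: the chip runs continuously all year


def annual_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in kilowatt-hours for a constant draw of `watts` over `hours`."""
    return watts * hours / 1000.0


# Wattages quoted in the text: under 15W for micro-server chips, 45-50W for Xeon.
chips = {
    "micro-server chip (15W ceiling)": 15,
    "Xeon (low end, 45W)": 45,
    "Xeon (high end, 50W)": 50,
}

for name, watts in chips.items():
    print(f"{name}: {annual_kwh(watts):.1f} kWh/year")
```

Under these assumptions the gap works out to roughly 260 to 310 kWh per processor per year, before any cooling overhead. The sketch says nothing about work done per watt, which differs between the chip families.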

In addition, servers can be run on several different operating systems, including Unix, Linux, Windows NT, or proprietary OS from HP, IBM, Oracle, and others. Application servers allow access to specific applications, so that the number of different types of servers has proliferated. That makes this computer market segment particularly diverse and somewhat confusing. The result is, perhaps, a full-circle return to the days of the all-powerful IT department, but one that now manages huge diversity rather than going for a one-size-fits-all approach.

The Server Market

IT market research company Gartner Inc. says the worldwide server market saw a 7.9% increase in revenues in 2011, and 7.0% in unit shipments. Fourth-quarter units increased 4.5%, while revenues declined 5.4%.

- The server industry is led in revenues by IBM (33.7%), HP (26.9%), Dell (14.6%), Oracle-Sun (5.3%), and Fujitsu (formerly Fujitsu-Siemens) (3.6%), with all others comprising 15.8%. Among all others are Asian suppliers and several strong second-tier players.

- In 2011, the market saw $52.8 billion in worldwide revenues and 9.52 million units shipped for an ASP of $5,544 per system.

- This compares to $48.9 billion and 8.9 million units in 2010 for an ASP of $5,499. The 2010-11 delta was +7.9% in dollars and +7.0% in units.

- In unit volume, HP led with 2.8 million, followed by Dell with 2.1 million, IBM with 1.2 million, Fujitsu with 0.3 million, and, for the first time on the list, Lenovo with 0.2 million.

- 2012 is expected to continue with mixed results as the EU works through painful solutions to debt crises in Greece, Italy, Spain, and elsewhere. Offsetting this downer will be increases in x86 servers, server virtualization, and growing demand in China, India, and elsewhere in the developing world.
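The ASP and growth figures in the list above follow from simple division, and the arithmetic can be sketched as below. The inputs are the rounded revenue and unit numbers quoted in this article, so the results only approximate the published values, which were presumably computed from unrounded data. The function names `asp` and `yoy_growth` are illustrative.

```python
# Figures as quoted in the article (Gartner, rounded).
REVENUE_2011 = 52.8e9  # dollars
UNITS_2011 = 9.52e6    # systems shipped
REVENUE_2010 = 48.9e9  # dollars
UNITS_2010 = 8.9e6     # systems shipped


def asp(revenue: float, units: float) -> float:
    """Average selling price per system, in dollars."""
    return revenue / units


def yoy_growth(current: float, previous: float) -> float:
    """Year-over-year change as a percentage."""
    return (current / previous - 1.0) * 100.0


if __name__ == "__main__":
    print(f"2011 ASP: ${asp(REVENUE_2011, UNITS_2011):,.0f}")
    print(f"2010 ASP: ${asp(REVENUE_2010, UNITS_2010):,.0f}")
    print(f"Revenue growth: {yoy_growth(REVENUE_2011, REVENUE_2010):.1f}%")
    print(f"Unit growth: {yoy_growth(UNITS_2011, UNITS_2010):.1f}%")
```

With the rounded inputs, the ASPs land within about $5 of the quoted $5,544 and $5,499, and the growth rates come out close to the quoted +7.9% and +7.0%.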

Thus, the server market should remain a mid-to-high single-digit growth, cyclical market for the foreseeable future. Like the PC, servers have become a necessary appliance in information technology. They will see increased demand as the developing world ecosystems expand and as new server designs and applications develop. This includes the largely untapped market for IP media servers in the home. Unlike the PC or iPad, servers have been a business appliance not directly affected by consumer demand. But as more and more consumer applications emerge, more servers will be needed.

Market Dynamics

The server market is relatively concentrated, with about half a dozen significant hardware players and a few dominant operating systems. Microprocessor technology is dominated by Intel, but has competition from AMD and elsewhere. Most recently, ARM designs have entered the market with very low-power multicore chips that can be cascaded into the hundreds of micro-CPUs. Intel has countered with its Atom CPU. In addition, there are new venture entrants in the server space that focus on technical innovation in chips, server designs, and power consumption. The most notable relatively new applications of server technology are small, very low-power server blades, low-power micro-servers, cloud servers, and server virtualization.

Historically, the market was dominated by proprietary operating systems based on the robust Unix OS and the proprietary OS of companies like IBM and Sun (now Oracle). However, Microsoft’s x86 operating systems, from Windows NT to Windows Server 2012, offer a less expensive, more standardized server configuration, somewhat commoditizing the lower end of the server market. It was anticipated that x86 servers would bury their proprietary predecessors, but that has not happened, probably due to users’ heavy investments in programming for the proprietary systems and their known reliability. Thus, x86 has grown and expanded the market. In addition, many servers are to a large degree mission-critical, where a failure can cost more than the system itself. Therefore, most server systems are designed with higher-cost, more reliable, and tightly controlled components, often with redundancy built in. Since the market is heavily business-oriented, it is more sensitive to business cycles and careful in its selection process. The server market sees significant impact in recessionary years, particularly at the high end, as businesses delay major expenditures. During the past decade, consumer demand for mobile PCs and some other CE devices has outpaced server growth and defied economic cycles.

Server Innovations

There continues to be innovation in this market segment ― some from software and OS upgrades, others from main hardware vendors, chip suppliers, and startups in the server market space. Current trends toward micro-server technology may result in a sea change, but the trends are more likely to open up new server applications. The biggest threat to server hardware, including connectors, may come from more highly integrated designs with streamlined circuitry, and from server virtualization, which can replace multiple OS servers with one universal unit. There’s always a major push toward lower power consumption and moves to micro-server systems, with Intel, AMD, ARM and possibly Samsung in the mix. Use of SSDs is also increasing and is expected to complement rather than replace HDD technology. Here are some recent developments:

- ARM and Intel Atom: Low-power chips that can provide massive multi-core performance within a rack/blade server

- Calxeda: Server-on-Chip ARM Server with 10 GbE, PCIe, and SATA support (pictured at right)

- SeaMicro: Low-power micro-server systems using AMD Opteron and Intel Atom low-power CPUs. SeaMicro has been acquired by AMD for $334 million.

- IBM and others: Low-cost Xeon servers with solid state disks. Example: IBM x3650 M4 Express ($2,749), a 2U rack server with 2 CPU sockets, up to 24 DIMM memory slots (768GB), (16) 2.5-inch or (6) 3.5-inch SAS/SATA HDDs, or (32) 1.8-inch SSDs with up to 18TB storage, (4) 1GbE, (4-6) PCIe, (4) USB

- SuperMicro: Micro-server rack systems (below) with Intel Xeon LGA 1155 socket, 2X SATA3 3.5-inch HDD at 6Gbps, up to 32GB DDR3 ECC UDIMMs (4X), 2X GbE

- SuperMicro X9SRA server motherboard: 1X LGA 2011 socket with support for Xeon E5; up to 256GB RDIMM or 64GB UDIMM in 8X sockets; (5) PCIe slots; (2X) GbE; (2) SATA3, (4) SATA; (4X) USB 3.0, (14) USB 2.0; DOM power connector

Implications for the Connector Market

The $50 billion-plus server market represents a $1.4 billion world market for connectors. Key connector products include:

- CPU sockets: multiples/system

- Memory sockets: more with 64-bit systems

- System bus connectors: PCIe, InfiniBand, others

- Backplane connectors: from CompactPCI to high-performance/high-speed backplane connectors

- Mezzanine card connectors

- SAS/SATA HDD and SSD connectors: trending toward new high-speed designs

- Electronic power/blade connectors

- High-performance copper and fiber optic connectors and cable assemblies

- Multiple IO connectors, including 1-10Gb LAN interfaces, trending toward 40GbE

- Miles of rack and equipment room cabling

- Depending on OEM, entry-level/x86 servers are more likely to be outsourced, while higher-end servers are made in-house

- Big companies like IBM and HP make their own; small companies and startups outsource assembly
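As a rough cross-check on the $1.4 billion connector figure cited at the start of this section, the sketch below relates it to the 2011 server revenue and unit numbers quoted earlier in the article. It assumes all three figures describe the same year, which the article does not state explicitly, so the result is an order-of-magnitude estimate only. The function names are illustrative.

```python
CONNECTOR_MARKET = 1.4e9       # dollars, as stated in this section
SERVER_REVENUE_2011 = 52.8e9   # dollars, Gartner figure quoted earlier
SERVER_UNITS_2011 = 9.52e6     # systems shipped, Gartner figure quoted earlier


def connector_value_per_server(connector_market: float, units: float) -> float:
    """Average connector content per shipped server, in dollars."""
    return connector_market / units


def connector_share_pct(connector_market: float, server_revenue: float) -> float:
    """Connector market as a percentage of server revenue."""
    return connector_market / server_revenue * 100.0


per_server = connector_value_per_server(CONNECTOR_MARKET, SERVER_UNITS_2011)
share = connector_share_pct(CONNECTOR_MARKET, SERVER_REVENUE_2011)
print(f"Connector content per server: ${per_server:,.0f}")
print(f"Connector share of server revenue: {share:.2f}%")
```

On these assumptions, connector content averages roughly $147 per server, or about 2.65% of server revenue. The average hides a wide spread between entry-level x86 boxes and enterprise systems.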

Server design and manufacturing is global, but is more concentrated in North America, with pockets of excellence in Japan, Europe, and Taiwan. As with the PC, there is significant outsourcing, but it tends to be more regional, whereas most PC manufacturing is now in Asia. The server market in China is growing rapidly, as is its connector market. x86 server designs are more likely to be assembled in Asia, with Taiwan a leading player.

We anticipate increasing use of in-system photonic circuitry in servers. Enterprise servers, along with scientific and other HPCCs, could be the platform where on-chip optical interconnect happens in volume, moving inward from SFP/QSFP interconnects and fixed fiber optic cables, and outward from the CPU. Servers with multiple CPUs and massive memory should provide an excellent platform for on-chip/on-board fiber optics, including the use of polymer waveguides or optical traces/ribbons on PCB. Copper systems continue to amaze, performing well into multi-gigabit speeds. High-speed networking to 40 and 100Gb/s will be a mix of copper and fiber optics. 25Gb/s per channel seems to be the crossover point, but that could extend to 40Gb/s for short distances. System design and PCB technology are critical here.

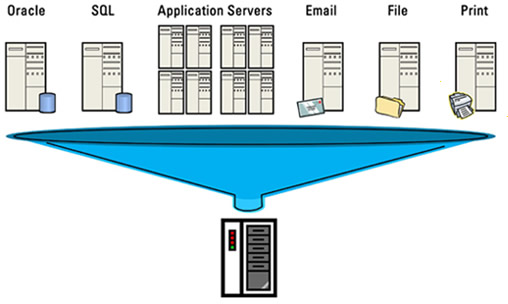

Hardware Issue with Virtualization

According to Energy Star/EPA, a virtual server is a software implementation of a server that executes programs like a real server. Virtualization is a method of running multiple independent servers on a single physical server. Instead of operating many servers at low utilization, virtualization combines the processing power onto fewer servers that operate at higher utilization.

Source: US Environmental Protection Agency

Virtualization can drastically reduce the number of servers in a data center, thus decreasing power consumption and waste heat, and consequently the size of the necessary cooling equipment. Some investment in software and hardware may be required to implement virtualization, but it is usually modest compared to the savings achieved with a reduced need for hardware.

With today’s servers, consolidation ratios in the 10:1 to 15:1 range can be achieved without placing stress on server resources. Thus, this may represent a significant issue for server volume going forward depending on the rate and scope of penetration. We will get a better handle on what this means to future server volumes ahead of our late 2012 server market report.
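To illustrate what those consolidation ratios could mean for unit volume, here is a minimal sketch that computes how many physical hosts remain after virtualization. The fleet size of 300 servers is a hypothetical example, not a figure from this article, and the function names are illustrative. The calculation assumes every workload can be consolidated at the stated ratio.

```python
def hosts_needed(workloads: int, ratio: int) -> int:
    """Physical servers required when up to `ratio` workloads share one host."""
    if workloads < 1 or ratio < 1:
        raise ValueError("workloads and ratio must both be at least 1")
    return -(-workloads // ratio)  # ceiling division without floats


def reduction_pct(workloads: int, ratio: int) -> float:
    """Percentage drop in physical server count after consolidation."""
    return (1 - hosts_needed(workloads, ratio) / workloads) * 100.0


FLEET = 300  # hypothetical number of lightly used physical servers

for ratio in (10, 15):  # the 10:1 and 15:1 ratios quoted in the text
    print(
        f"{ratio}:1 -> {hosts_needed(FLEET, ratio)} hosts, "
        f"{reduction_pct(FLEET, ratio):.1f}% fewer machines"
    )
```

For this hypothetical fleet, the count falls from 300 machines to 30 at 10:1 and to 20 at 15:1. That is the kind of reduction that could weigh on server unit volume, depending on how widely virtualization is adopted.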

No part of this article may be used without the permission of Bishop & Associates Inc.

