Intra-Data Center Optics

With data rates and bandwidth demands rising inside the data center, fiber optics are poised to enable network functionality that was not previously possible.

The need to change the data center network from a hierarchical design to a leaf-spine or more meshed one has arisen from the ever-increasing demand for compute and storage access and from the use of server virtualization. Now that many applications can run on one server, if that server becomes overloaded, its virtual machines (VMs, i.e., the applications) need to move to another server quickly. Four hops through the network is not quick, so network architecture is changing to accommodate VM mobility. What used to be a standard three-tier client-server network is starting to look like an HPC cluster.

Server density can be increased with blade servers; while they have not been adopted as quickly as anticipated, their installation rate will increase over the next five years. Blade servers will help facilitate VM mobility as well as the transition to a software-defined networking (SDN) model. With SDN, top-of-rack (ToR) and end-of-row (EoR) switching architectures will become obsolete, and the SDN controller will be incorporated into software running on off-the-shelf servers. Full implementation, however, will take many years; in the interim, we still see healthy growth in both ToR and EoR architectures.

Data center Ethernet server connections are currently transitioning from Gigabit Ethernet to 10G, which in turn is pushing the access, aggregation/distribution, and core switches to 40G and 100G. In 2014, the majority of data center servers were still connected with 1G Ethernet, but in 2015 the majority will be 10G. Optical Gigabit Ethernet connections to servers use SFP transceiver modules, though most server connections will remain copper RJ45. As servers move to 10G, the majority of connections will remain copper, using either RJ45 over Category 6A cabling or SFP+ direct-attach copper (DAC) cables.

With Ethernet data rates rising through server network connection upgrades, and with access switches moving closer to servers in ToR or EoR configurations, the aggregation/distribution portion of the network is transitioning from copper to fiber. This shift actually began while servers were still connected at 1G: 10GBASE-T switch ports supporting 100m (or even more than 10m) took so long to materialize that 10G uplinks had to be optical. Now, with 10GBASE-T or 10G SFP+ DAC connections at the server, the uplinks are either multiple 10GBASE-SR links or 40GBASE-SR4. This is where fiber will gain most of its momentum.
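To make the uplink choice concrete, here is a minimal Python sketch comparing multiple 10GBASE-SR links with a 40GBASE-SR4 link for a given uplink capacity. It assumes the commonly cited physical layouts (10GBASE-SR on one duplex LC fiber pair; 40GBASE-SR4 on a 12-fiber MPO with four transmit and four receive fibers lit). The `UplinkOption` class and `ports_and_fibers` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UplinkOption:
    """Hypothetical description of one optical uplink PMD."""
    name: str
    lane_gbps: int        # data rate per optical lane
    lanes: int            # parallel lanes per direction
    connector: str
    fibers_per_port: int  # fibers lit per port (transmit + receive)


# Assumed layouts: 10GBASE-SR uses one duplex LC pair; 40GBASE-SR4 uses a
# 12-fiber MPO with 4 transmit and 4 receive fibers lit (4 left dark).
TEN_G_SR = UplinkOption("10GBASE-SR", lane_gbps=10, lanes=1,
                        connector="duplex LC", fibers_per_port=2)
FORTY_G_SR4 = UplinkOption("40GBASE-SR4", lane_gbps=10, lanes=4,
                           connector="MPO-12", fibers_per_port=8)


def ports_and_fibers(option: UplinkOption, uplink_gbps: int) -> tuple[int, int]:
    """Return (ports, fibers) needed to carry uplink_gbps using this option."""
    port_gbps = option.lane_gbps * option.lanes
    ports = -(-uplink_gbps // port_gbps)  # ceiling division
    return ports, ports * option.fibers_per_port


if __name__ == "__main__":
    for opt in (TEN_G_SR, FORTY_G_SR4):
        ports, fibers = ports_and_fibers(opt, 40)
        print(f"{opt.name}: {ports} x {opt.connector} port(s), {fibers} fibers")
```

For a 40G uplink, both options light eight fibers; under these assumptions the difference lies in the number of switch ports and connectors (four duplex LC ports versus one MPO port), not in the fiber count.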

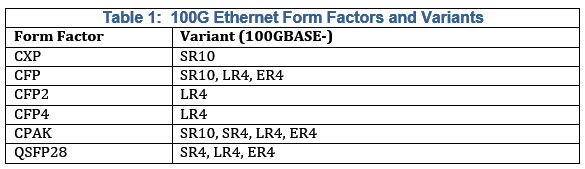

While these links will mostly be 10G or multiple 10Gs at first, by 2019 there will be a healthy market for 40G connections in this part of the network. Data center 10G optical transceivers will use SFP+. The CFP and QSFP+ will be used for 40G, but QSFP+ is expected to take over in the long term. For 100G optical transceivers, there are six form factors: CXP, CFP, CFP2, CFP4, CPAK, and QSFP28. Because Cisco owns and makes the CPAK, that form factor will capture a large share of the market over the next five years. However, the QSFP28 is expected to dominate switch connections from other equipment manufacturers. In fact, many of the top transceiver manufacturers have decided to support only certain variants in some of these form factors because they see them as transient products. The table below shows their plans.

Short-reach optical variants will continue to dominate the data center, since the majority of connections are less than 50m. However, there is still a need for a cost-effective option for the few links that are longer than 150m. Four MSAs are now vying for this space: 100G CLR4 Alliance, CWDM4, OpenOptics, and PSM4. Together they reflect how the vendor community is positioning itself to address this part of the market. Which solution will ultimately win is completely up in the air, because none has shown a compelling cost advantage over the existing LR4 variant or over the others. Regardless of the ultimate implementation, the form factor will remain one of those listed in the table above, so connectivity will remain the same.

Another emerging trend is a 25G switch-to-server connection for ToR (or, for that matter, EoR) architectures. Microsoft spearheaded this effort and succeeded in getting an IEEE task force to develop the standard (IEEE 802.3by). We believe it will take hold in large Internet data centers (IDCs) within the next five years. In fact, Microsoft, Mellanox, Arista Networks, Broadcom, and Google formed the 25G Ethernet Consortium to move this along quickly. Once these products are available, IDCs are expected to move to 25G connections on servers and 100G in the aggregation network.
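The pairing of 25G server links with 100G aggregation follows from lane arithmetic: QSFP+ and QSFP28 both use four lanes, at 10G and 25G per lane respectively. The sketch below is a hypothetical illustration, not any vendor's configuration model. It computes the port speed and the number of server links available when each four-lane port is broken out into single lanes; the `QuadPort` class is an invented name and the 32-port switch is an assumed example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuadPort:
    """Hypothetical model of a four-lane pluggable port (QSFP+ or QSFP28)."""
    form_factor: str
    lane_gbps: int
    lanes: int = 4  # QSFP+ and QSFP28 both carry four lanes

    @property
    def port_gbps(self) -> int:
        # Aggregate port speed is simply lanes x per-lane rate.
        return self.lanes * self.lane_gbps

    def breakout(self, ports: int) -> tuple[int, int]:
        """Return (server links, Gb/s per link) if every port is split into single lanes."""
        return ports * self.lanes, self.lane_gbps


QSFP_PLUS = QuadPort("QSFP+", lane_gbps=10)  # 4 x 10G = 40G
QSFP28 = QuadPort("QSFP28", lane_gbps=25)    # 4 x 25G = 100G

if __name__ == "__main__":
    for port in (QSFP_PLUS, QSFP28):
        links, speed = port.breakout(32)  # assumed 32-port switch
        print(f"{port.form_factor}: {port.port_gbps}G per port, "
              f"{links} x {speed}G server links via breakout")
```

Run as a script, this prints 40G per port with 128 x 10G links for QSFP+, and 100G per port with 128 x 25G links for QSFP28. The same four-lane port yields 2.5 times the bandwidth per server link when the lane rate moves from 10G to 25G.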

Data Center Optics Market Dynamics

With 10G dominating for the next few years, transceiver manufacturers finally have an opportunity to recoup their long-standing development investments. However, before they can recover the resources spent on 40G and 100G, they will be spending on 400G. It seems to be a never-ending conundrum for them. To make matters worse, at 100G they could lose a good portion of their anticipated revenue to one of their biggest customers: Cisco.

The CPAK module presents a dilemma for transceiver manufacturers: it will fracture the 100G market. Cisco commands more than 60% of the data center switching market, so if it uses its CPAK module exclusively, transceiver suppliers will see a drastically reduced revenue stream. Cisco has publicly stated that it intends to adopt other 100G form factors, but we are skeptical that this will happen quickly, as evidenced by the fact that its entire Nexus line now includes CPAK ports.

Another notable element of Cisco's strategy is that it apparently does not intend to release 100G line cards for its tried-and-true Catalyst 6500-series product line. These have been the workhorse EoR switches in data centers for more than 10 years. This will force data center network engineers either to adopt something from the Nexus line to stay with Cisco or to move to another switch manufacturer. In fact, some network managers we recently interviewed are moving away from Cisco altogether for their Ethernet networks because of this. Regardless, optical transceiver suppliers will now be vying for a much smaller piece of the 100G pie. In addition, data center managers who stay with Cisco now have a single source for optical modules, which could be troublesome when demand is high.

Below is a table that shows the top transceiver suppliers and their current 10G-and-above commercial products.

Three suppliers (Avago Technologies, Finisar, and JDSU) have dominated the data communications optical transceiver market for a long time. In recent years, Avago and Finisar have been jockeying for the number one and number two positions, but they now tend to focus on different aspects of the market. Avago has leveraged its semiconductor expertise to develop short-reach embedded technologies, while Finisar aims to be a one-stop shop for anything Ethernet. JDSU, while still a formidable player in this market, has changed its strategy to focus on the end-game form factor rather than making every step along the way. For instance, although it was one of the first companies to have a CFP, it made clear that it would not commercialize it and would instead focus on the smaller follow-on form factors: CFP2, CFP4, and ultimately QSFP28.

Some pure-play 40G and 100G-and-above suppliers, such as ColorChip and Reflex Photonics, are turning their optical engines into viable standards-based products.

While the Fibre Channel data center market is substantial, it is really just incremental to the Ethernet data center market. InfiniBand will gain ground in HPC and perhaps in large financial-sector data centers, but it will remain confined to those niches for the foreseeable future.

For connectivity providers, it is worth noting that the I/O connectors for data center optics will remain MPO, LC, and SC in the coming years, but the board-mount connectors may need to change because of higher data rates, signal integrity, and EMI concerns. In fact, we have already seen this progression from QSFP+ to QSFP28 to CDFP.