SC19: Supercomputers Continue to Evolve

The premier exhibition and conference for supercomputers demonstrated technologies including artificial intelligence, big data analytics, machine learning, and a rapidly expanding array of applications that require high-performance computing services and high-performance connectivity products.

The market for supercomputers and extreme computing services has evolved from a few highly specialized machines to a huge industry that lives at the edge of advanced technology. Beginning with the Cray-1 machine announced in 1975, this highly specialized class of computers was tasked with the most complex problems, which could only be solved using the most advanced technology. In the years that followed, next-generation machines using the latest chips and system packaging continued to increase performance to address ever more complex problems. Today, supercomputers are able to tackle such complex applications as visualizing the 3D network of neurons in a human brain. With performance measured in quadrillions of floating-point operations per second (petaflops), these machines may incorporate many thousands of processor cores and consume 100 kW of power or more.

SC19, the premier exhibition and conference for supercomputers, held in Denver, Colorado, November 17–22, 2019, showcased the latest advances in performance as well as the evolution of how supercomputing services can be delivered. With 13,338 registered attendees from 118 countries, the event showed that this industry is truly international in scope. The list of participating organizations included domestic, European, and Asian universities as well as government-funded organizations. Approximately 374 exhibitors demonstrated technologies including artificial intelligence, big data analytics, machine learning, and a rapidly expanding array of applications that require high-performance computing services. These developments are driving the supercomputer industry in at least two directions.

Traditionally, organizations that required supercomputing resources could set up their own facility to be used internally. Except for very large users such as government, aerospace, or medical research organizations, this is a very expensive option, as the rapid advance of supercomputer technology can render state-of-the-art machines obsolete within a few years. The alternative is to submit a problem and data set to a supercomputer facility managed by a university or commercial data center. Users can buy access to a supercomputer by the hour. A typical supplier may have several machines with scaled performance capable of matching the requirements of the client. These high-performance computing (HPC) systems typically consist of highly specialized servers and storage modules utilizing the most current general-purpose or graphics processing units, FPGAs, and accelerators. Suppliers compete in terms of processor density, cooling efficiency, power efficiency, expansion flexibility, and minimal footprint.

The design of these systems results in exceptionally large blade servers that use multiple packaging techniques, including backplane, mezzanine, and twinaxial cable assemblies.

Operating so many high-performance processor cores in a cramped 2U envelope creates immense cooling challenges. Multiple suppliers demonstrated thermal management strategies including walls of fans, a water-cooled system for chilling high-speed airflow, heat pipes, water-cooled cold plates, and total immersion in a closed-loop Fluorinert liquid.

Just as cloud computing has come to dominate the commercial computing environment, high-performance computing has also begun migrating to the cloud. As the market for HPC hardware becomes more price competitive and driven by open design architectures such as Open19, several hardware manufacturers have embraced cloud-based delivery of HPC services. Penguin Computing, a large manufacturer of HPC servers, storage, and network switches, now offers HPC as a service via its Penguin Computing On-Demand (POD) program. Companies such as Rescale offer access to supercomputing resources that can be scaled to a specific application, and even down to the number of cores to be applied to a specific job. A user can instantly access nearly limitless computing power to address diverse requirements in modeling, simulation, and analysis on a pay-per-use basis. The need for constantly upgrading in-house computers and maintaining support staff is eliminated. Providers of HPC cloud services address demand for absolute data security with ITAR and FedRAMP certification.

HPC cloud services could have a significant impact on the electronic connector industry. As more users eliminate in-house HPC resources, fewer machines will be purchased, reducing sales of HPC-related connectors. Large commercial data centers will constantly update their capacity to support their cloud business. These machines will likely require the highest performance interconnects available, but because there are relatively few large data centers, sales of state-of-the-art copper and fiber interconnects will be limited.

Interconnect protocols that define a specific connector are also evolving. PCIe has been the gold standard for many years and has been repeatedly upgraded to support higher speeds. The introduction of next-generation graphics processing units (GPUs) has made memory bandwidth a gating factor for system performance. More recently introduced standards such as Gen-Z and CXL are changing the landscape by offering higher bandwidth, reduced latency, and greater design flexibility.
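To put the PCIe speed upgrades in perspective, the following is a minimal sketch that estimates one-direction bandwidth for a x16 link across PCIe generations 1 through 5. It uses the published per-lane transfer rates and line encodings and ignores packet and protocol overhead, so the results are approximate upper bounds. The function name `pcie_bandwidth_gbytes` and the table layout are illustrative choices, not part of any standard API.

```python
# Per-lane transfer rate (GT/s) and line encoding (payload bits, total bits)
# for PCIe generations 1 through 5.
PCIE_GENERATIONS = {
    1: (2.5, 8, 10),      # 8b/10b encoding
    2: (5.0, 8, 10),      # 8b/10b encoding
    3: (8.0, 128, 130),   # 128b/130b encoding
    4: (16.0, 128, 130),
    5: (32.0, 128, 130),
}


def pcie_bandwidth_gbytes(gen: int, lanes: int = 16) -> float:
    """Approximate one-direction bandwidth in GB/s, ignoring protocol overhead."""
    rate_gt, payload_bits, total_bits = PCIE_GENERATIONS[gen]
    usable_gbits = rate_gt * payload_bits / total_bits * lanes
    return usable_gbits / 8  # bits to bytes


for gen in sorted(PCIE_GENERATIONS):
    print(f"PCIe {gen}.0 x16: {pcie_bandwidth_gbytes(gen):.1f} GB/s per direction")
```

Each generation roughly doubles the bandwidth of the one before it, which is why a single connector family has had to be requalified repeatedly for higher signal rates.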

The SC conference is an annual event and will move to Atlanta in 2020. Since this is a system-level rather than component-level conference, relatively few connector manufacturers participate. The exceptions this year were I-PEX, Molex, and Samtec.

I-PEX announced a new embedded optical module that features four duplex 25Gb/s channels and supports link lengths of up to 300 meters. The electrical connection to the PCB uses the company's CABLINE-CA low-profile connector. This mid-board transceiver features a pigtail fiber termination.

The Molex booth featured NearStack micro cable assemblies that are rated to 112Gb/s PAM4. The company also displayed a large production cable backplane assembly using its flagship Impact family of high-speed backplane connectors.

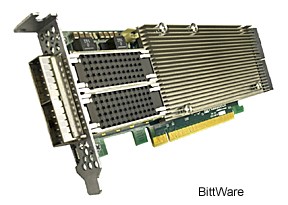

The primary Molex emphasis was on products from its recently acquired BittWare business, including accelerators and storage processors that are prime components used in HPC equipment.

The large Samtec booth featured a series of high-speed connector displays, including 56Gb/s PAM4 direct connect to a silicon package, 112Gb/s PAM4 front panel to mid-board to backplane, as well as a demonstration of next-gen data center architectures by eSilicon and Samtec.

Technologies and products demonstrated at SC19 may provide some useful insight into the challenges that commercial and even consumer-level products will face within the next five years. Thermal management has already become a major design consideration in commercial products, but economics will not allow solutions that involve circulating cold water or cooling stacks and heat pipes that are six inches high. Chip manufacturers have made great progress in reducing the power consumption of processors, but increased core counts will continue to drive power consumption up.

In the short to intermediate term, PAM4 signaling will enable designers to utilize well-documented copper interconnects. Other than standard pluggable I/O cable assemblies that were displayed by a host of domestic and international suppliers, there was very little evidence of optical interconnects at SC19. Growth of edge computing spurred by local IoT applications may provide new opportunities for longer reach optical links. Broad adoption of optical interconnects, both in I/O and even inside the box applications, may become necessary when systems require greater than 56Gb/s PAM4.
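The appeal of PAM4 on copper comes down to simple arithmetic: four amplitude levels carry two bits per symbol, so the symbol rate on the channel is half that of two-level NRZ signaling at the same bit rate. The sketch below illustrates this for the nominal 56Gb/s and 112Gb/s lane rates mentioned above. It deliberately ignores forward error correction and encoding overhead, and the function name `symbol_rate_gbaud` is a hypothetical helper chosen for illustration.

```python
import math


def symbol_rate_gbaud(bit_rate_gbps: float, levels: int) -> float:
    """Symbol rate (GBaud) needed to carry bit_rate_gbps with the given number of levels."""
    bits_per_symbol = math.log2(levels)  # NRZ: 2 levels = 1 bit; PAM4: 4 levels = 2 bits
    return bit_rate_gbps / bits_per_symbol


for rate in (56, 112):
    nrz = symbol_rate_gbaud(rate, levels=2)
    pam4 = symbol_rate_gbaud(rate, levels=4)
    # The Nyquist frequency (half the symbol rate) is a common first-order
    # indicator of the bandwidth the copper channel must support.
    print(f"{rate} Gb/s: NRZ {nrz:.0f} GBaud, PAM4 {pam4:.0f} GBaud "
          f"(PAM4 Nyquist about {pam4 / 2:.0f} GHz)")
```

Under these assumptions, a 112Gb/s PAM4 lane runs at the same symbol rate as a 56Gb/s NRZ lane. The trade-off is reduced spacing between signal levels, which tightens the noise margin and is one reason copper reach shrinks as lane rates climb.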
